¶  Quick start guide


Make sure your system meets our minimum requirements.

We recommend installing Lyosis on a server, but it can also run on your workstation. See here why.

Running an FLS Manager instance requires a standardized execution platform: Docker.

Please refer to https://docs.docker.com/get-docker/ to follow the installation procedure on your system.

Make sure Docker starts automatically at boot by following the additional last steps of this procedure.

With Docker running, FLS Manager can be easily started.

On Linux:

- Log in to our Docker registry (contact us to get your credentials):

docker login registry.bonemonitor.com

- Create a folder on the host system to hold the data:

mkdir ~/flsmanagerdata

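- Run the latest version of the application (on the first run, docker stop and docker rm report that no flsmanager container exists, which is expected):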

docker stop -t 120 flsmanager

docker rm flsmanager

docker pull registry.bonemonitor.com/fls-database

docker run \
--detach \
--name flsmanager \
--volume ~/flsmanagerdata:/data \
--publish 443:8080 \
--env PASSPHRASE=UnicornsAreReal! \
--env EXTERNAL_URL=https://localhost \
registry.bonemonitor.com/fls-database
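On Linux, the stop, remove, pull and run commands above can be kept in one reusable script so that every update uses the same options. The following is a minimal sketch, not an official script: the function name start_flsmanager and the variables FLS_DATA_DIR, FLS_PASSPHRASE and FLS_EXTERNAL_URL are hypothetical names chosen for this example; the Docker options are copied from the command above.

```shell
#!/bin/sh
# Hypothetical wrapper around the documented Linux commands.
# FLS_DATA_DIR, FLS_PASSPHRASE and FLS_EXTERNAL_URL are example variable
# names for this sketch; FLS Manager itself does not read them.

start_flsmanager() {
  local image=registry.bonemonitor.com/fls-database
  local data_dir=${FLS_DATA_DIR:-$HOME/flsmanagerdata}

  # Both values are mandatory for the container (see Environment variables).
  if [ -z "${FLS_PASSPHRASE:-}" ] || [ -z "${FLS_EXTERNAL_URL:-}" ]; then
    echo "FLS_PASSPHRASE and FLS_EXTERNAL_URL must be set" >&2
    return 1
  fi

  mkdir -p "$data_dir" || return 1

  # Stop and remove the previous container; errors are ignored because
  # there is no container to stop on the very first run.
  docker stop -t 120 flsmanager >/dev/null 2>&1
  docker rm flsmanager >/dev/null 2>&1

  docker pull "$image" || return 1
  docker run \
    --detach \
    --name flsmanager \
    --volume "$data_dir":/data \
    --publish 443:8080 \
    --env PASSPHRASE="$FLS_PASSPHRASE" \
    --env EXTERNAL_URL="$FLS_EXTERNAL_URL" \
    "$image"
}
```

For example, source the file, set FLS_PASSPHRASE (in single quotes, since characters such as ! and $ are special to the shell) and FLS_EXTERNAL_URL, then call start_flsmanager.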

On Windows:

- Reboot your computer after installing Docker.

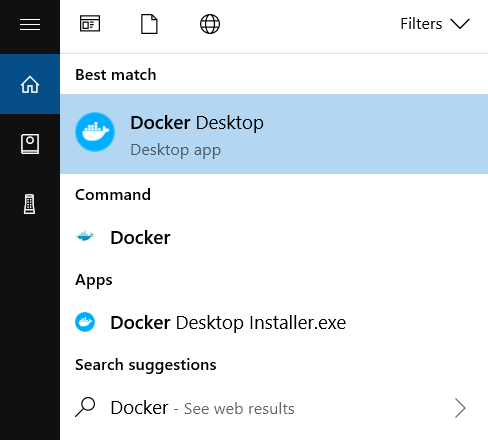

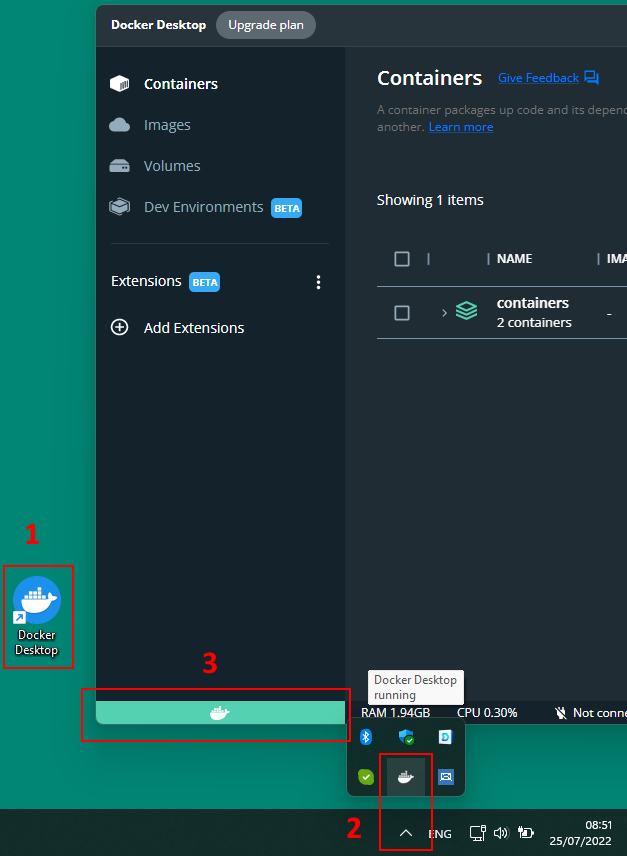

- Start Docker Desktop by searching for Docker and selecting Docker Desktop in the search results.

- Make sure Docker Desktop is running (see Make sure Docker Desktop is running under Troubleshooting).

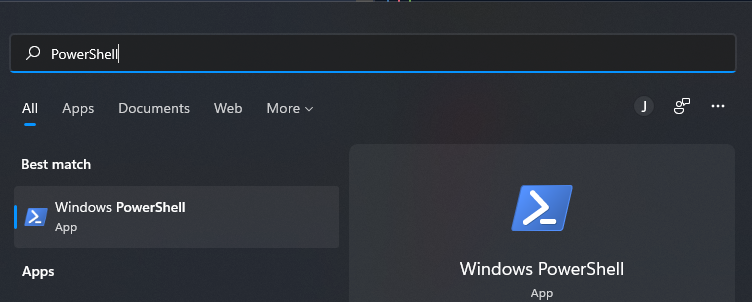

- Open a PowerShell terminal.

- Log in to our Docker registry (contact us to get your credentials):

docker login registry.bonemonitor.com

- Create a folder on the host system to hold the data:

cd "$env:HOMEPATH"

mkdir flsmanagerdata

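- Run the latest version of the application (on the first run, docker stop and docker rm report that no flsmanager container exists, which is expected):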

docker stop -t 120 flsmanager

docker rm flsmanager

docker pull registry.bonemonitor.com/fls-database

docker run --detach --name flsmanager --volume ${pwd}/flsmanagerdata:/data --publish 443:8080 --env PASSPHRASE="UnicornsAreReal!" --env EXTERNAL_URL=https://localhost registry.bonemonitor.com/fls-database

On first use, Docker Desktop might prompt you to share the folder.

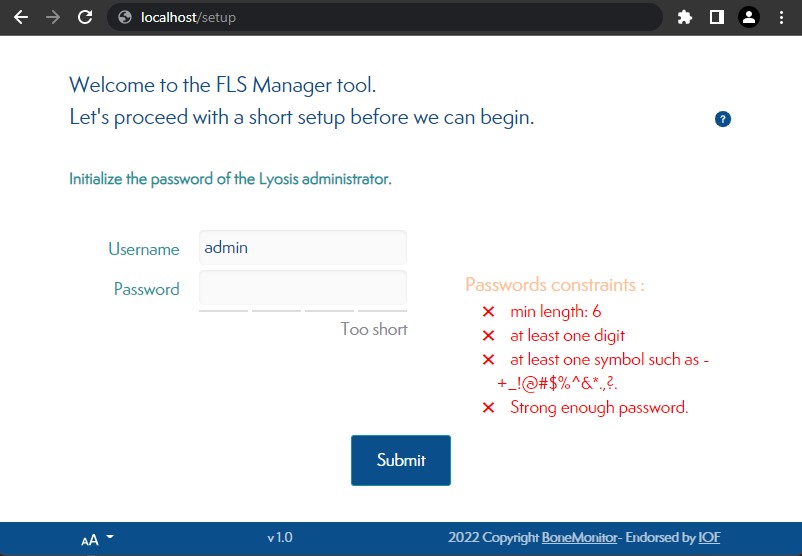

Congratulations!

The application is now running at https://localhost.
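The application may need some time after docker run before it answers requests. The following sketch, which assumes curl is installed on the host, polls the URL until it responds; the function name wait_for_flsmanager, the number of attempts and the 5-second delay are arbitrary choices for this example.

```shell
# wait_for_flsmanager is a hypothetical helper: poll the URL until it
# answers HTTP requests, or give up after the given number of attempts.
# -k (--insecure) accepts the default self-signed certificate;
# -s hides progress output; -o /dev/null discards the page content.
wait_for_flsmanager() {
  local url=${1:-https://localhost} attempts=${2:-60}
  while [ "$attempts" -gt 0 ]; do
    if curl -k -s -o /dev/null "$url"; then
      echo "FLS Manager answers at $url"
      return 0
    fi
    attempts=$((attempts - 1))
    sleep 5
  done
  echo "no answer from $url; inspect the logs with: docker logs flsmanager" >&2
  return 1
}
```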

Follow the wizard to set up the admin account's password and proceed to the initial setup.

You can continue with our default TLS certificate: click the Advanced button, then Proceed (or Continue) to access the application. To learn why, see My browser shows a warning about an invalid HTTPS (TLS) certificate under Troubleshooting.

You can now take the first steps to customize your application.

¶ Installation guide

The following sections contain important information for turning your local test into a production-ready application.

¶  Where to store data


It is important to mount /data to a folder on the host system so that data persists across container lifecycles. The --volume ~/flsmanagerdata:/data part of the run command above (-v is the short form of --volume) mounts the ~/flsmanagerdata directory of the host system as /data inside the container, where FLS Manager writes its data files.

If /data is not mounted to the host system, data is lost when the container is removed (for instance with docker rm during an update).

Backups are also generated inside this folder, in /data/backup.
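To check which host folder actually backs /data in a running container, docker inspect can list the container's mounts. This is a hedged sketch: check_data_mount is a hypothetical helper name, and it only reports what is mounted on /data (a Docker-managed volume shows up under Docker's own storage path rather than in a folder you chose).

```shell
# check_data_mount is a hypothetical helper. The Go template passed to
# docker inspect prints the host-side Source of the mount whose
# Destination is /data. Returns 2 if inspect fails (no such container),
# 1 if nothing is mounted on /data, 0 otherwise.
check_data_mount() {
  local host_dir
  host_dir=$(docker inspect \
    --format '{{range .Mounts}}{{if eq .Destination "/data"}}{{println .Source}}{{end}}{{end}}' \
    "${1:-flsmanager}") || return 2
  if [ -z "$host_dir" ]; then
    echo "WARNING: nothing is mounted on /data" >&2
    return 1
  fi
  echo "/data is stored on the host in $host_dir"
}
```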

¶  Environment variables


| Variable | Requirement | Description |
|---|---|---|
| PASSPHRASE | Mandatory | Passphrase used for data encryption. Allowed characters are a-z, A-Z, 0-9 and any of _!@#$%^&*. Avoid spaces and single or double quotes. |
| EXTERNAL_URL | Mandatory | The external URL as accessed by clients, for example https://lyosis.com or https://fls.internal. It should not end with a /. |
| TZ | Optional | Time zone. Defaults to Etc/UTC. Example value: Europe/Brussels. List of valid time zone codes here. |

Do not lose your PASSPHRASE. It is the key used to encrypt and decrypt your data, and you will also need it to restore a backup. It cannot be changed after the first run, nor recovered if lost.
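Because the PASSPHRASE cannot be changed or recovered, it is worth checking it against the allowed characters before the first run. The sketch below, with hypothetical helper names, validates a passphrase against the character set from the table, generates a random one from /dev/urandom, and strips trailing / characters from an EXTERNAL_URL value.

```shell
# Hypothetical helpers for the PASSPHRASE and EXTERNAL_URL rules above.

# Succeed only if the value is non-empty and uses a-z A-Z 0-9 _!@#$%^&*
is_valid_passphrase() {
  local rest
  [ -n "$1" ] || return 1
  # Delete every allowed character; only the "." sentinel may remain.
  # The sentinel also stops $(...) from hiding trailing newlines.
  rest=$(printf '%s.' "$1" | LC_ALL=C tr -d 'A-Za-z0-9_!@#$%^&*')
  [ "$rest" = "." ]
}

# Print a random passphrase of N allowed characters (default 32).
generate_passphrase() {
  LC_ALL=C tr -dc 'A-Za-z0-9_!@#$%^&*' < /dev/urandom | head -c "${1:-32}"
  echo
}

# Remove any trailing "/" from an EXTERNAL_URL value.
normalize_external_url() {
  local url=$1
  while [ "${url%/}" != "$url" ]; do
    url=${url%/}
  done
  printf '%s\n' "$url"
}
```

When passing a generated value on the command line, wrap it in single quotes, because characters such as $, &, * and ! are special to the shell. Store it somewhere safe before the first run.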

¶  Backup


Every 12 hours, FLS Manager generates a backup inside /data/backup (~/flsmanagerdata/backup on the host with the run command above).

Archiving and keeping a history of these backups is the local system administrator's responsibility: there is no backup file rotation, and each new backup overwrites the previous one.

The archive produced is encrypted. You will need your PASSPHRASE to restore it.
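Since each new backup overwrites the previous one, the administrator has to keep the history. The following sketch moves the current archive to a dated file in another folder and keeps only the newest copies. archive_backup, the dated naming scheme and the default of 14 copies are assumptions made for this example. DEST_DIR should be on other storage (for example a mounted NAS share); the warning files are removed only because the archive has been moved away, as the cron example in Secure your backup also does.

```shell
# archive_backup is a hypothetical helper.
# Usage: archive_backup BACKUP_DIR DEST_DIR [KEEP] [STAMP]
# BACKUP_DIR is the host folder mounted as /data/backup.
archive_backup() {
  local backup_dir=$1 dest_dir=$2 keep=${3:-14}
  local stamp=${4:-$(date +%Y%m%d-%H%M%S)}
  local archive="$backup_dir/backup.xb.gz.enc"

  if [ ! -f "$archive" ]; then
    echo "no backup archive in $backup_dir" >&2
    return 1
  fi
  mkdir -p "$dest_dir" || return 1

  # Copy under a dated name, then remove the local archive and the
  # backup.not.secured.*.warning files left by the application.
  cp "$archive" "$dest_dir/backup-$stamp.xb.gz.enc" || return 1
  rm -f "$archive" "$backup_dir"/backup.not.secured.*.warning

  # Glob results are sorted and dated names sort oldest first:
  # delete the oldest copies until only KEEP remain.
  set -- "$dest_dir"/backup-*.xb.gz.enc
  while [ "$#" -gt "$keep" ]; do
    rm -f "$1"
    shift
  done
}
```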

If you want to increase the backup frequency, you can trigger a backup manually (or from a cron job) on the host system:

docker exec flsmanager backup

(assuming the running container is named flsmanager)

Warning: a backup can consume significant resources, so it is up to the local system administrator to choose the best backup frequency. A backup every 5 minutes would protect well against data loss, but your system might not withstand it if the database contains millions of records.
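When backups are triggered often from cron, a run can still be in progress when the next one starts. This sketch, using a hypothetical run_backup helper and an example lock path, skips a run while the previous one holds a lock directory.

```shell
# run_backup is a hypothetical helper. mkdir either creates the lock
# directory or fails because it already exists, so it acts as a simple
# lock. If the script is killed mid-run, remove the stale lock by hand.
run_backup() {
  local lock=${1:-/tmp/flsmanager-backup.lock} status
  if ! mkdir "$lock" 2>/dev/null; then
    echo "previous backup still running, skipping this run" >&2
    return 0
  fi
  docker exec flsmanager backup
  status=$?
  rmdir "$lock"
  return "$status"
}

# Example crontab entry (every 4 hours), assuming this function is saved
# in a hypothetical script /usr/local/bin/flsmanager-backup.sh that ends
# with a call to run_backup:
# 0 */4 * * * /usr/local/bin/flsmanager-backup.sh
```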

¶  Secure your backup


To be effective, a backup should not be stored on the same machine as the application itself.

After a backup archive has been created, send it to another storage location and delete it from /data/backup.

FLS Manager warns you when a backup has been overwritten. These are the notices the application displays if backups have not been properly secured:

| Step | Who | Message displayed |
|---|---|---|
| App start | None | At application start, no warning is displayed if the backup does not exist yet. |
| First backup run | None | If the backup is successful, nothing is displayed. |
| Second backup run | Users with role Administrator IT | If the second run has overwritten a previous backup archive /data/backup/backup.xb.gz.enc, a warning is displayed. This warning remains as long as the file /data/backup/backup.not.secured.1.warning exists. |
| Third backup run | Users with role Administrator IT or Administrator FLS | If the third run has overwritten a previous backup archive /data/backup/backup.xb.gz.enc, an error is displayed to all users with either of these roles. This error remains as long as the file /data/backup/backup.not.secured.2.warning exists. |
| Any backup error | Users with role Administrator IT or Administrator FLS | On any backup error (no space left on disk, target folder /data/backup/ not writable, ...), an error is displayed to all users with either of these roles. This error remains until a backup is successfully created. |
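The warning files listed in the table can also be checked from the host, for example by a monitoring system. This sketch is a hypothetical helper, not part of FLS Manager: it maps the files to the exit codes 0, 1 and 2 commonly used by monitoring plugins (OK, WARNING, CRITICAL). It cannot detect the Any backup error case, which does not leave such a file.

```shell
# backup_security_status is a hypothetical helper. Its argument is the
# host folder mounted as /data/backup.
backup_security_status() {
  local dir=${1:-$HOME/flsmanagerdata/backup}
  if [ -e "$dir/backup.not.secured.2.warning" ]; then
    echo "CRITICAL: backup overwritten twice without being secured"
    return 2
  elif [ -e "$dir/backup.not.secured.1.warning" ]; then
    echo "WARNING: backup overwritten once without being secured"
    return 1
  fi
  echo "OK: no unsecured-backup warning file"
  return 0
}
```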

Example of a cron job on the container's host machine:

36 14 * * * /usr/bin/sshpass -p ${REMOTE_USER_PASS} /usr/bin/rsync -avzhog -e "ssh -p ${NAS_PORT}" /home/flsmanager/data/backup/backup.xb.gz.enc ${REMOTE_USER}@${NAS_HOST}:/volume1/backup/ && rm -f /home/flsmanager/data/backup/backup.*

Where:

- 36 14 * * * runs the job every day at 14:36. See https://crontab.guru/.

- ${REMOTE_USER_PASS} is the remote SSH user's password.

- -e "ssh -p ${NAS_PORT}" makes rsync connect to the remote server on a custom SSH port.

- /home/flsmanager/data/backup/backup.xb.gz.enc is the path, on the host machine, of the local backup archive generated by the Docker container.

- ${REMOTE_USER}@${NAS_HOST} is the remote user@host to connect to.

- /volume1/backup/ is the remote path where the file is sent.

- rm -f /home/flsmanager/data/backup/backup.* removes the local files (archive AND warnings) if the transfer succeeded.

¶  Restore


The restore procedure deletes all current data and replaces it with the data contained in the archive. Double-check what you are doing.

To restore the FLS Manager application from a backup, you need:

- The FLS Manager software as a Docker image.

- A backup archive: the backup.xb.gz.enc file that was generated in /data/backup.

- The PASSPHRASE of the backup archive: the value of the PASSPHRASE environment variable passed to the Docker container that produced the backup archive.

Follow these steps:

- Make sure FLS Manager is running with the same PASSPHRASE as the backup archive. Otherwise, refer to the next section.

- Copy the backup archive to /data/restore/backup.xb.gz.enc (~/flsmanagerdata/restore/backup.xb.gz.enc on the host with the run command above). The archive must have exactly this name.

- On the host system, import the backup with this command line:

docker exec flsmanager restore

(assuming the running container is named flsmanager)

Your data has now been restored.
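The copy and restore steps above can be combined in one function that also asks for confirmation, since the restore deletes all current data. restore_flsmanager and the RESTORE confirmation word are hypothetical choices for this sketch; DATA_DIR is the host folder mounted as /data (~/flsmanagerdata with the quick start command).

```shell
# restore_flsmanager is a hypothetical helper.
# Usage: restore_flsmanager ARCHIVE [DATA_DIR]
restore_flsmanager() {
  local archive=$1 data_dir=${2:-$HOME/flsmanagerdata} answer
  if [ ! -f "$archive" ]; then
    echo "archive not found: $archive" >&2
    return 1
  fi
  # Extra safety step added for this example: require explicit consent.
  printf 'Restoring deletes all current data. Type RESTORE to continue: '
  read -r answer
  [ "$answer" = RESTORE ] || return 1
  mkdir -p "$data_dir/restore" || return 1
  # The restore command expects exactly this file name.
  cp "$archive" "$data_dir/restore/backup.xb.gz.enc" || return 1
  docker exec flsmanager restore
}
```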

¶ Restore a backup with a different PASSPHRASE

You can also restore a backup archive that was generated with a different PASSPHRASE from the one used by your running instance. In that case, provide it as an additional argument:

docker exec flsmanager restore backupArchivePassword

(where backupArchivePassword is the PASSPHRASE the backup archive was generated with)
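Typing the old PASSPHRASE on the command line saves it in the shell history. As an alternative, this sketch reads it from a file; restore_with_passphrase_file and the file location are hypothetical. Keep the file readable by its owner only (chmod 600) and delete it afterwards. Note that the value is still passed to docker exec as an argument, so it is briefly visible in the host's process list.

```shell
# restore_with_passphrase_file is a hypothetical helper. The archive must
# already be in /data/restore/backup.xb.gz.enc, as described above.
restore_with_passphrase_file() {
  local file=$1 passphrase=
  if [ ! -r "$file" ]; then
    echo "cannot read $file" >&2
    return 1
  fi
  # read fails when the last line has no newline; accept it if non-empty.
  IFS= read -r passphrase < "$file" || [ -n "$passphrase" ] || return 1
  docker exec flsmanager restore "$passphrase"
}
```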

¶  Update Lyosis


To get the latest version of Lyosis see our dedicated update page.

¶  Reset the application


Data can be lost: read these instructions carefully.

If you want to delete all data from your database, after a bad CSV import for instance, follow these steps:

- Stop and remove the container:

docker stop flsmanager && docker rm flsmanager

- Remove the database files on the host system (the mysql folder inside the host folder mounted as /data; adjust the path if you did not use ~/flsmanagerdata):

rm -rf ~/flsmanagerdata/mysql

- Restart the application (see the Quick start guide above).

You could also delete the whole folder mounted as /data; the application will re-create the directory structure. Be aware, however, that /data/backup might contain a backup you don't want to lose.
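The reset steps can also be scripted with a confirmation prompt. This sketch uses a hypothetical reset_flsmanager_db helper and, like the steps above, deletes only the mysql folder, so /data/backup is left untouched. Restart the application afterwards as in the Quick start guide.

```shell
# reset_flsmanager_db is a hypothetical helper. Its argument is the host
# folder mounted as /data (default ~/flsmanagerdata).
reset_flsmanager_db() {
  local data_dir=${1:-$HOME/flsmanagerdata} answer
  if [ ! -d "$data_dir/mysql" ]; then
    echo "no database folder in $data_dir" >&2
    return 1
  fi
  printf 'Type DELETE to erase all data in %s/mysql: ' "$data_dir"
  read -r answer
  [ "$answer" = DELETE ] || return 1
  docker stop flsmanager && docker rm flsmanager || return 1
  # Only the database files are removed; backup/ stays in place.
  rm -rf "$data_dir/mysql"
}
```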

¶  Forgotten password


Follow these steps:

- Make sure FLS Manager is running.

- On the host system, reset the password of an account (admin in this example) with this command line:

docker exec flsmanager reset_password admin un1cornsAreReal!

(assuming the running container is named flsmanager)

This sets the password of the account with username admin to un1cornsAreReal!.

Passing a plain-text password on the command line can raise security issues. In bash, for instance, consider starting the command with a space so that it is not saved to your .bash_history (this only works when the HISTCONTROL variable includes ignorespace or ignoreboth).
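Instead of typing the new password on the command line, the following sketch prompts for it without echoing it, so it never reaches the shell history. reset_admin_password is a hypothetical helper; the password is still passed to docker exec as an argument, so it is briefly visible in the host's process list while the command runs.

```shell
# reset_admin_password is a hypothetical helper around the documented
# reset_password command. The username defaults to admin.
reset_admin_password() {
  local user=${1:-admin} password
  printf 'New password for %s: ' "$user" >&2
  # Turn off echo only when reading from a terminal.
  if [ -t 0 ]; then stty -echo; fi
  IFS= read -r password
  if [ -t 0 ]; then stty echo; printf '\n' >&2; fi
  if [ -z "$password" ]; then
    echo "empty password, aborting" >&2
    return 1
  fi
  docker exec flsmanager reset_password "$user" "$password"
}
```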

¶  Going to production with real data


Please go through this checklist:

- Do not use the default PASSPHRASE (the UnicornsAreReal! example value) to encrypt your data.

- Make sure backups are secured.

- Provide a TLS certificate for HTTPS.
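The first checklist item can be verified on a running instance by reading the container's environment with docker inspect. uses_default_passphrase is a hypothetical helper that succeeds when the example value from the quick start is still in use. Since the PASSPHRASE cannot be changed after the first run, run this check before importing real data.

```shell
# uses_default_passphrase is a hypothetical helper. The Go template prints
# one KEY=value environment entry per line; grep -x -F looks for an exact,
# literal match of the example value.
uses_default_passphrase() {
  docker inspect --format '{{range .Config.Env}}{{println .}}{{end}}' \
    "${1:-flsmanager}" | grep -qxF 'PASSPHRASE=UnicornsAreReal!'
}
```

For example: if uses_default_passphrase; then echo "Do not go to production with the example PASSPHRASE"; fi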

¶  Troubleshooting


¶ Make sure Docker Desktop is running

The following error can appear if Docker Desktop is not started on your local computer:

PS C:\Users\BoneMonitor> docker login registry.bonemonitor.com

Username: mccoy

Password:

error during connect: This error may indicate that the docker daemon is not running.:

Post "http://%2F%2F.%2Fpipe%2Fdocker_engine/v1.24/auth": open //./pipe/docker_engine: The system cannot find the file specified.

To solve this issue, you just have to make sure Docker Desktop is running:

- Double-click the Docker Desktop icon on your desktop.

- Look in the icon tray near the clock and click once on the Docker Desktop icon.

- The Docker Desktop application should appear. Make sure it displays a green status bar.

You can now proceed with the installation or use your already installed applications.

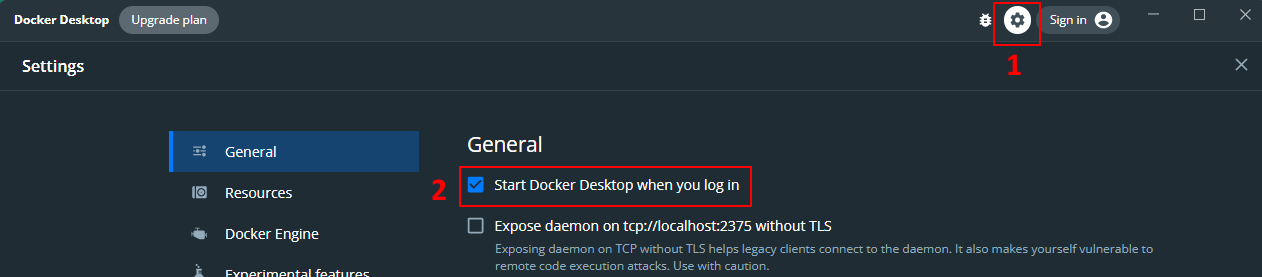

Tip: Docker Desktop can start automatically when you log in to your computer:

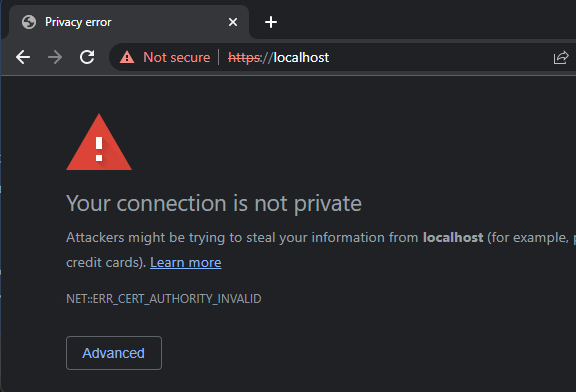

¶ My browser shows a warning about an invalid HTTPS (TLS) certificate


You can continue with our default TLS certificate: click the Advanced button, then Proceed (or Continue) to access the application.

By default, the application exposes a single TLS port, secured by a self-signed certificate that encrypts all HTTP traffic. Browsers warn about it because a self-signed certificate is not issued by a certificate authority they trust.

To use a fully compliant TLS certificate, place a reverse proxy (e.g. HAProxy or Traefik) in front of the application.
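Once a proxy is in place, you can check which certificate is actually served with the openssl command-line tool. This sketch (hypothetical helper names, openssl assumed to be installed) prints the subject, issuer and validity dates. A subject identical to the issuer usually indicates a self-signed certificate, so is_self_signed is a heuristic rather than a full verification.

```shell
# served_certificate is a hypothetical helper: print subject, issuer and
# validity dates of the certificate served on HOST:PORT.
served_certificate() {
  local host=${1:-localhost} port=${2:-443}
  openssl s_client -connect "$host:$port" -servername "$host" </dev/null 2>/dev/null |
    openssl x509 -noout -subject -issuer -dates
}

# is_self_signed is a hypothetical helper: 0 if subject equals issuer,
# 1 if they differ, 2 if no certificate could be read.
is_self_signed() {
  local info subject issuer
  info=$(served_certificate "$@") || return 2
  subject=$(printf '%s\n' "$info" | sed -n 's/^subject=//p')
  issuer=$(printf '%s\n' "$info" | sed -n 's/^issuer=//p')
  [ -n "$subject" ] && [ "$subject" = "$issuer" ]
}
```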

¶ Can I run Lyosis without internet access?

Yes, you can.

At any time, you can download our latest release here (contact us to get your credentials), transfer it safely to the isolated server, and then use docker load to load the image locally.

See Docker's documentation for reference.

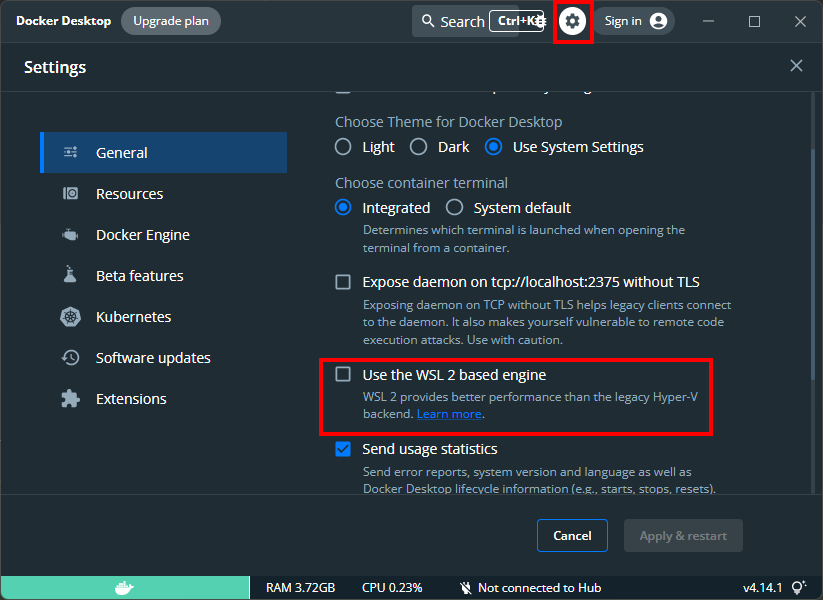

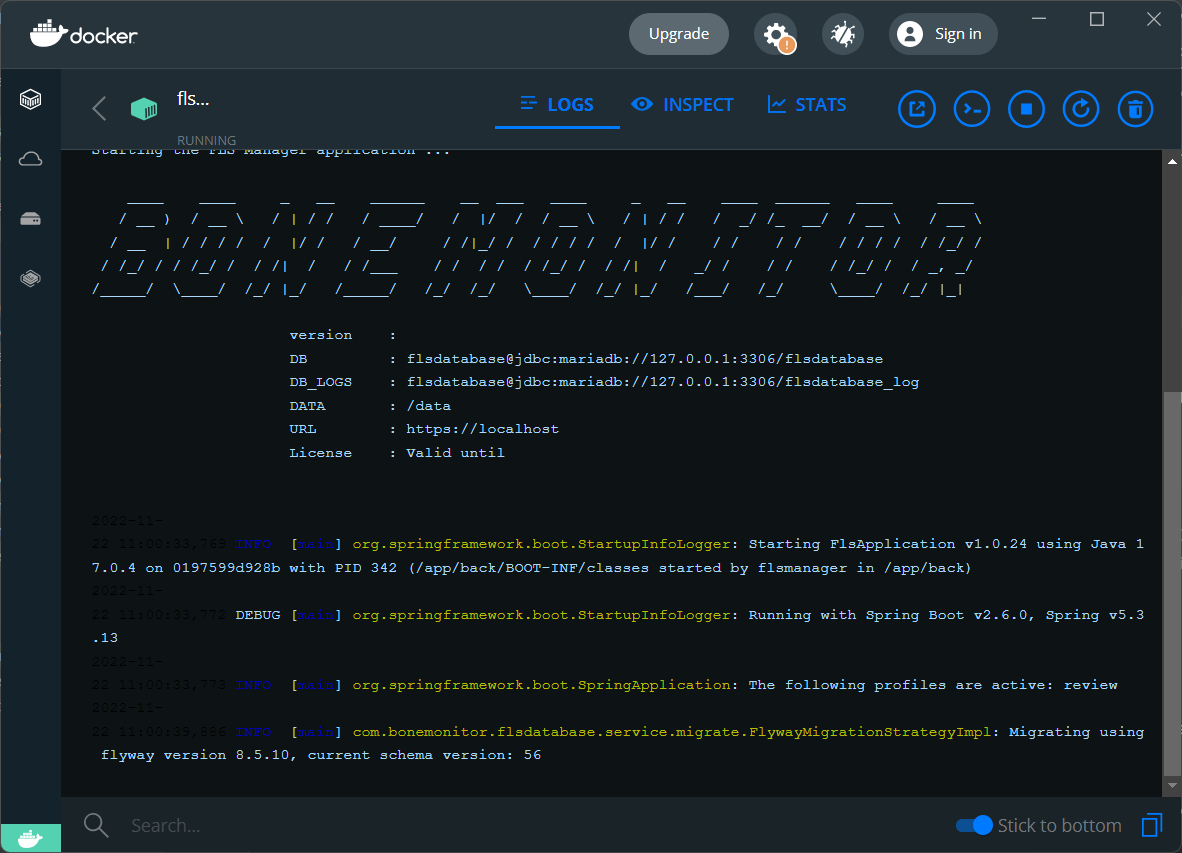
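Before loading a release on an isolated server, it is worth checking that the file was not corrupted during the transfer. This sketch assumes you computed a SHA-256 checksum with sha256sum on the machine that downloaded the release and copied it along; load_offline_image is a hypothetical helper, while docker load --input is the standard Docker command for loading an image archive.

```shell
# load_offline_image is a hypothetical helper.
# Usage: load_offline_image FILE EXPECTED_SHA256
load_offline_image() {
  local file=$1 expected=$2 actual
  # sha256sum prints "<hash>  <file>"; keep only the hash.
  actual=$(sha256sum "$file" | cut -d ' ' -f 1)
  if [ -z "$actual" ] || [ "$actual" != "$expected" ]; then
    echo "checksum mismatch for $file" >&2
    return 1
  fi
  docker load --input "$file"
}
```

If the image was pulled on a connected machine instead, docker save --output fls-database.tar registry.bonemonitor.com/fls-database produces an archive that docker load accepts.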

¶ Lyosis can't start properly on Docker Desktop Windows

If you try to run Lyosis on Windows with Docker Desktop, the application might not start properly.

Symptom: the application hangs on the message Migrating using flyway ...

(To see these logs, open Docker Desktop, click Containers, then click flsmanager.)

In that case, the solution is to disable the Use the WSL 2 based engine setting in Docker Desktop: